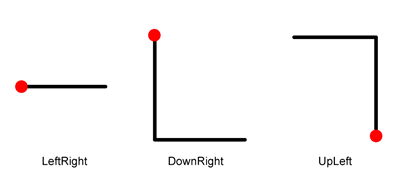

This example uses the SiGeR algorithm, which requires a text descriptor for each gesture class. Listing 1 shows how these gesture classes are created and grouped in a gesture set.

```java
// Each gesture class is described by a string of compass directions
GestureClass leftRightLine = new GestureClass("LeftRight");
leftRightLine.addDescriptor(new TextDescriptor("E"));

GestureClass downRight = new GestureClass("DownRight");
downRight.addDescriptor(new TextDescriptor("S,E"));

GestureClass upLeft = new GestureClass("UpLeft");
upLeft.addDescriptor(new TextDescriptor("N,W"));

// Group the gesture classes in a gesture set
GestureSet gestureSet = new GestureSet("GestureSet");
gestureSet.addGestureClass(leftRightLine);
gestureSet.addGestureClass(upLeft);
gestureSet.addGestureClass(downRight);
```
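The text descriptors above are strings of compass directions. As a rough illustration of how a drawn stroke might be compared with such a descriptor, the sketch below reduces a list of points to a direction string and checks it for equality. This is an assumption for illustration only, not SiGeR's actual matching: the class `DirectionEncoder` is hypothetical, screen coordinates are assumed (y grows downwards), and consecutive identical directions are merged.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration only: not part of iGesture or SiGeR.
final class DirectionEncoder {

    // Maps one movement (dx, dy) to its dominant compass direction.
    // Screen coordinates are assumed, so a positive dy means "down" (S).
    static String direction(double dx, double dy) {
        if (Math.abs(dx) >= Math.abs(dy)) {
            return dx >= 0 ? "E" : "W";
        }
        return dy >= 0 ? "S" : "N";
    }

    // Encodes a stroke given as {x, y} points into a comma-separated
    // direction string, merging consecutive identical directions.
    static String encode(double[][] points) {
        List<String> dirs = new ArrayList<>();
        for (int i = 1; i < points.length; i++) {
            double dx = points[i][0] - points[i - 1][0];
            double dy = points[i][1] - points[i - 1][1];
            if (dx == 0 && dy == 0) {
                continue; // ignore repeated points
            }
            String d = direction(dx, dy);
            if (dirs.isEmpty() || !dirs.get(dirs.size() - 1).equals(d)) {
                dirs.add(d);
            }
        }
        return String.join(",", dirs);
    }

    // Assumed rule: a stroke matches if its encoding equals the descriptor text.
    static boolean matches(double[][] points, String descriptor) {
        return encode(points).equals(descriptor);
    }

    public static void main(String[] args) {
        double[][] downRight = {{0, 0}, {0, 10}, {0, 20}, {10, 20}, {20, 20}};
        System.out.println(encode(downRight));         // prints S,E
        System.out.println(matches(downRight, "S,E")); // prints true
    }
}
```

Exact string equality is the simplest possible rule; a real recogniser would typically tolerate noise such as short jitter segments.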

Next, the Configuration object is created. The gesture set defined above is added to the configuration and the SiGeR algorithm is selected, as shown in Listing 2. The Recogniser can then be instantiated with this configuration.
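The sketch below illustrates the general idea of a configuration that holds gesture classes and an algorithm name, from which a recogniser is created. All types (`SimpleConfiguration`, `GestureAlgorithm`, `ExactMatchAlgorithm`) are hypothetical stand-ins, not the iGesture Configuration or Recogniser API. The assumption that the algorithm is loaded reflectively by class name is only suggested by the fully qualified algorithm name used in the XML configuration of this section.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class ConfigurationDemo {
    public static void main(String[] args) {
        SimpleConfiguration config = new SimpleConfiguration();
        config.addGestureClass("LeftRight", "E");
        config.addGestureClass("DownRight", "S,E");
        config.addGestureClass("UpLeft", "N,W");
        config.setAlgorithm(ExactMatchAlgorithm.class.getName());
        GestureAlgorithm recogniser = config.createRecogniser();
        System.out.println(recogniser.classify("N,W")); // prints UpLeft
    }
}

// Hypothetical algorithm interface; not the iGesture API.
interface GestureAlgorithm {
    void init(Map<String, String> descriptors);

    // Returns the matching gesture class name, or null if nothing matches.
    String classify(String directions);
}

// Toy algorithm: a gesture matches a class if the direction strings are equal.
class ExactMatchAlgorithm implements GestureAlgorithm {
    private Map<String, String> descriptors = Map.of();

    public void init(Map<String, String> descriptors) {
        this.descriptors = descriptors;
    }

    public String classify(String directions) {
        for (Map.Entry<String, String> entry : descriptors.entrySet()) {
            if (entry.getValue().equals(directions)) {
                return entry.getKey();
            }
        }
        return null;
    }
}

// Hypothetical configuration: stores gesture classes and the algorithm class name.
final class SimpleConfiguration {
    private final Map<String, String> descriptors = new LinkedHashMap<>();
    private String algorithmClassName;

    void addGestureClass(String name, String descriptor) {
        descriptors.put(name, descriptor);
    }

    void setAlgorithm(String className) {
        algorithmClassName = className;
    }

    // Instantiates the configured algorithm by name via reflection (an assumption).
    GestureAlgorithm createRecogniser() {
        try {
            GestureAlgorithm algorithm = (GestureAlgorithm) Class.forName(algorithmClassName)
                    .getDeclaredConstructor().newInstance();
            algorithm.init(new LinkedHashMap<>(descriptors));
            return algorithm;
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("cannot load " + algorithmClassName, e);
        }
    }
}
```

Selecting the algorithm by name keeps the configuration independent of concrete algorithm classes, which is what makes a purely declarative (for example XML) configuration possible.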

The InputDeviceClient is used to capture input from a suitable device. A list of devices is instantiated and a MouseReader is added to it, which allows the user to draw a gesture with the mouse while pressing the middle mouse button. When the button is released, the gesture is recognised. To react to this event, the example class has to implement the ButtonDeviceEventListener interface. These steps are shown in Listing 3.

```java
// Register the mouse as input device and listen for button events
List<InputDevice> devices = new ArrayList<>();
devices.add(new MouseReader());
client = new InputDeviceClient(devices);
client.addButtonDeviceEventListener(this);
```
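The listener mechanism in Listing 3 follows the observer pattern. A minimal, self-contained sketch of that pattern is shown below; `BufferingClient` and `ButtonReleaseListener` are hypothetical names, not iGesture classes. Points are buffered only while the button is pressed, and on release each listener is notified and, as in Listing 4, reads and clears the buffer itself.

```java
import java.util.ArrayList;
import java.util.List;

class ListenerDemo {
    public static void main(String[] args) {
        BufferingClient client = new BufferingClient();
        // The listener reads the buffered stroke and then clears it
        client.addListener(timestamp -> {
            System.out.println(client.bufferedPoints().size() + " points at " + timestamp);
            client.clearBuffer();
        });
        client.move(0, 0);   // ignored: button not pressed yet
        client.press();
        client.move(0, 0);
        client.move(0, 10);
        client.release(42L); // prints "2 points at 42"
    }
}

// Hypothetical callback interface, standing in for ButtonDeviceEventListener.
interface ButtonReleaseListener {
    void onButtonReleased(long timestamp);
}

// Hypothetical stand-in for InputDeviceClient: buffers points while the button is held.
final class BufferingClient {
    private final List<double[]> buffer = new ArrayList<>();
    private final List<ButtonReleaseListener> listeners = new ArrayList<>();
    private boolean pressed;

    void addListener(ButtonReleaseListener listener) {
        listeners.add(listener);
    }

    void press() {
        pressed = true;
    }

    void move(double x, double y) {
        if (pressed) {
            buffer.add(new double[] {x, y});
        }
    }

    // Notifies every registered listener that the button was released.
    void release(long timestamp) {
        pressed = false;
        for (ButtonReleaseListener listener : listeners) {
            listener.onButtonReleased(timestamp);
        }
    }

    List<double[]> bufferedPoints() {
        return new ArrayList<>(buffer);
    }

    void clearBuffer() {
        buffer.clear();
    }
}
```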

The method shown in Listing 4 is executed when the mouse button is released. A Note is created from the data stored in the buffer of the InputDeviceClient, and the buffer is cleared afterwards. The recognise method is called with this Note and returns a ResultSet. Depending on the result, either the name of the classified gesture or "recognition failed" is printed on the console.

```java
public void handleMouseUpEvent(InputDeviceEvent event) {
    // Build a Note from the buffered input and classify it
    ResultSet result = recogniser.recognise(client.createNote(0, event.getTimestamp(), 70));
    // Discard the buffered points before the next gesture is drawn
    client.clearBuffer();

    if (result.isEmpty()) {
        System.out.println("recognition failed");
    } else {
        System.out.println(result.getResult().getName());
    }
}
```
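Listing 4 only distinguishes an empty result set from a non-empty one. The sketch below assumes, which the text does not state, that a result set holds scored candidates and that the result returned is the best-scoring one; `RankedResults` and its record `Candidate` are hypothetical, and the record requires Java 16 or later.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

// Hypothetical stand-in for a recognition result set; not the iGesture ResultSet.
final class RankedResults {
    record Candidate(String name, double score) {}

    private final List<Candidate> candidates = new ArrayList<>();

    void add(String name, double score) {
        candidates.add(new Candidate(name, score));
    }

    boolean isEmpty() {
        return candidates.isEmpty();
    }

    // Returns the candidate with the highest score, if there is one.
    Optional<Candidate> best() {
        return candidates.stream().max(Comparator.comparingDouble(Candidate::score));
    }

    // Mirrors the console output of Listing 4.
    String describe() {
        return best().map(Candidate::name).orElse("recognition failed");
    }

    public static void main(String[] args) {
        RankedResults results = new RankedResults();
        System.out.println(results.describe()); // prints recognition failed
        results.add("LeftRight", 0.4);
        results.add("DownRight", 0.9);
        System.out.println(results.describe()); // prints DownRight
    }
}
```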

As an alternative to defining the gesture set and its classes programmatically, they can be defined in an XML file, as illustrated in Listing 5. This has the advantage that gestures can be defined independently of the source code and that far less code is needed to instantiate an algorithm.

```xml
<configuration>
  <algorithm name="org.ximtec.igesture.algorithm.siger.SigerRecogniser" />
  <set name="gestureSet1" id="1">
    <class name="LeftRight" id="2">
      <textDescriptor><text>E</text></textDescriptor>
    </class>
    <class name="DownRight" id="3">
      <textDescriptor><text>S,E</text></textDescriptor>
    </class>
    <class name="UpLeft" id="4">
      <textDescriptor><text>N,W</text></textDescriptor>
    </class>
  </set>
</configuration>
```
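Because the configuration is plain XML, it can be read with the standard Java DOM API. The sketch below is not iGesture's import code; `ConfigReader` is a hypothetical helper that extracts the algorithm name and maps each gesture class to the text of its descriptor.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical reader for the configuration format above, using only the JDK DOM API.
final class ConfigReader {

    // Parses an XML string into a DOM document.
    static Document parse(String xml) {
        try {
            return DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("invalid configuration", e);
        }
    }

    // Returns the value of the name attribute of the first <algorithm> element.
    static String algorithmName(Document doc) {
        Element algorithm = (Element) doc.getElementsByTagName("algorithm").item(0);
        return algorithm.getAttribute("name");
    }

    // Maps each gesture class name to the text of its first text descriptor.
    static Map<String, String> descriptors(Document doc) {
        Map<String, String> result = new LinkedHashMap<>();
        NodeList classes = doc.getElementsByTagName("class");
        for (int i = 0; i < classes.getLength(); i++) {
            Element gestureClass = (Element) classes.item(i);
            Element text = (Element) gestureClass.getElementsByTagName("text").item(0);
            result.put(gestureClass.getAttribute("name"), text.getTextContent().trim());
        }
        return result;
    }

    public static void main(String[] args) {
        String xml = "<configuration>"
                + "<algorithm name=\"org.ximtec.igesture.algorithm.siger.SigerRecogniser\" />"
                + "<set name=\"gestureSet1\" id=\"1\">"
                + "<class name=\"LeftRight\" id=\"2\"><textDescriptor><text>E</text></textDescriptor></class>"
                + "<class name=\"DownRight\" id=\"3\"><textDescriptor><text>S,E</text></textDescriptor></class>"
                + "<class name=\"UpLeft\" id=\"4\"><textDescriptor><text>N,W</text></textDescriptor></class>"
                + "</set></configuration>";
        Document doc = parse(xml);
        System.out.println(algorithmName(doc));
        System.out.println(descriptors(doc)); // prints {LeftRight=E, DownRight=S,E, UpLeft=N,W}
    }
}
```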

If the configuration is done with an XML file, Listing 6 replaces Listings 1 and 2. Note that the two declarations are semantically identical.

```xml
<iGestureBatch>
  <algorithm name="org.ximtec.igesture.algorithm.signature.SignatureAlgorithm">
    <parameter name="GRID_SIZE">
      <for start="8" end="16" step="2" />
    </parameter>
    <parameter name="RASTER_SIZE">
      <for start="120" end="240" step="10" />
    </parameter>
    <parameter name="DISTANCE_FUNCTION">
      <sequence>
        <value>org.ximtec.igesture.algorithm.signature.HammingDistance</value>
        <value>org.ximtec.igesture.algorithm.signature.LevenshteinDistance</value>
      </sequence>
    </parameter>
    <parameter name="MIN_DISTANCE">
      <for start="1" end="5" step="1" />
    </parameter>
  </algorithm>
</iGestureBatch>
```
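The iGestureBatch file above declares value ranges for four parameters of the SignatureAlgorithm. Assuming that each `for` range includes its end value and that a batch run evaluates every combination of values (a Cartesian product), the size of the parameter space can be computed as sketched below. `ParameterGrid` is a hypothetical helper, and iGesture's actual batch semantics may differ.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper; assumes inclusive ranges and a full Cartesian product.
final class ParameterGrid {

    // Expands a start/end/step range into values, treating "end" as inclusive.
    static List<String> range(int start, int end, int step) {
        List<String> values = new ArrayList<>();
        for (int v = start; v <= end; v += step) {
            values.add(Integer.toString(v));
        }
        return values;
    }

    // Builds every combination of parameter values, one map per configuration.
    static List<Map<String, String>> combinations(Map<String, List<String>> params) {
        List<Map<String, String>> result = new ArrayList<>();
        result.add(new LinkedHashMap<>());
        for (Map.Entry<String, List<String>> param : params.entrySet()) {
            List<Map<String, String>> next = new ArrayList<>();
            for (Map<String, String> partial : result) {
                for (String value : param.getValue()) {
                    Map<String, String> extended = new LinkedHashMap<>(partial);
                    extended.put(param.getKey(), value);
                    next.add(extended);
                }
            }
            result = next;
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, List<String>> params = new LinkedHashMap<>();
        params.put("GRID_SIZE", range(8, 16, 2));       // 8, 10, 12, 14, 16
        params.put("RASTER_SIZE", range(120, 240, 10)); // 13 values
        params.put("DISTANCE_FUNCTION", List.of(
                "org.ximtec.igesture.algorithm.signature.HammingDistance",
                "org.ximtec.igesture.algorithm.signature.LevenshteinDistance"));
        params.put("MIN_DISTANCE", range(1, 5, 1));     // 1 to 5
        System.out.println(combinations(params).size()); // prints 650
    }
}
```

Under these assumptions the grid contains 5 × 13 × 2 × 5 = 650 configurations, which gives a sense of how quickly a batch evaluation grows with each additional parameter range.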

To initialise the Multi-modal Recogniser, a gesture set containing composite gestures and an instance of a device manager must be passed as parameters. The device manager is needed to determine which user performed which gesture. Gesture handlers can be added so that the appropriate actions are taken when a particular gesture has been recognised.

Next, a Multi-modal Manager is created, which takes the Multi-modal Recogniser as input. Recognisers can then be added to this manager so that their output, the recognition results, is handled by the manager. Finally, the recognition process is started.

```java
// Create the multi-modal recogniser
MultimodalGestureRecogniser mmr = new MultimodalGestureRecogniser(compositeGestureSet, deviceManager);
// Add gesture handler(s)
mmr.addGestureHandler(handler);
// Create the multi-modal manager
MultimodalGestureManager manager = new MultimodalGestureManager(mmr);
// Add recogniser(s)
manager.addRecogniser(recogniser);
// Start recognising
mmr.start();
```
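To illustrate the data flow described above, the sketch below routes simple gesture results through a manager into a composite recogniser that notifies its handlers. The class names and, in particular, the rule that a composite gesture fires once all of its parts have been recognised are assumptions for illustration; the actual composite gesture semantics and the per-user checks via the device manager are not modelled.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.function.Consumer;

class MultimodalDemo {
    public static void main(String[] args) {
        CompositeRecogniser mmr =
                new CompositeRecogniser("DownRight+UpLeft", Set.of("DownRight", "UpLeft"));
        mmr.addGestureHandler(name -> System.out.println("recognised " + name));
        ResultManager manager = new ResultManager(mmr);
        manager.report("DownRight"); // nothing yet
        manager.report("LeftRight"); // ignored: not part of the composite
        manager.report("UpLeft");    // prints "recognised DownRight+UpLeft"
    }
}

// Hypothetical composite recogniser; not the iGesture MultimodalGestureRecogniser.
// Assumption: the composite fires once every part has been reported.
final class CompositeRecogniser {
    private final String compositeName;
    private final Set<String> parts;
    private final Set<String> seen = new HashSet<>();
    private final List<Consumer<String>> handlers = new ArrayList<>();

    CompositeRecogniser(String compositeName, Set<String> parts) {
        this.compositeName = compositeName;
        this.parts = Set.copyOf(parts);
    }

    void addGestureHandler(Consumer<String> handler) {
        handlers.add(handler);
    }

    // Receives every simple gesture reported through the manager.
    void accept(String gestureName) {
        if (!parts.contains(gestureName)) {
            return;
        }
        seen.add(gestureName);
        if (seen.containsAll(parts)) {
            seen.clear();
            handlers.forEach(handler -> handler.accept(compositeName));
        }
    }
}

// Hypothetical manager: individual recognisers report results here instead of
// handling them themselves, and the manager forwards them to the composite recogniser.
final class ResultManager {
    private final CompositeRecogniser target;

    ResultManager(CompositeRecogniser target) {
        this.target = target;
    }

    void report(String gestureName) {
        target.accept(gestureName);
    }
}
```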